Flexible policy matching in Kuma 2.0

This article was first published on the Kong blog.

Kuma is configurable through policies. These enable users to configure their service mesh with retries, timeouts, observability, and more.

Policies contain three main pieces of information:

- Which proxies are being configured

- What traffic for these proxies this configuration applies to (i.e., inbound, outbound, or even a subset of the directional traffic)

- The actual configuration to apply

Kuma 2.0 introduces a new matching API that’s more understandable and powerful. In this article, we explain why we’re doing this, how to use the new policy matching API, and what’s coming next.

Why change anything?

Up until now policies looked like:

    apiVersion: kuma.io/v1alpha1
    kind: Retry
    mesh: default
    metadata:
      name: web-to-backend-retry-policy
    spec:
      sources:
        - match:
            kuma.io/service: web_default_svc_80
      destinations:
        - match:
            kuma.io/service: backend_default_svc_80
      conf:
        http:
          numRetries: 5

This policy will retry failed requests for any traffic from web_default_svc_80 to backend_default_svc_80.

But the current API has some issues.

It’s unclear whether a policy is inbound (applying to traffic coming into the service) or outbound (applying to traffic going out of the service). This makes it unclear which proxy configuration a policy modifies.

In the example above, without further context, it’s not possible to say whether the proxy configuration of web_default_svc_80 or of backend_default_svc_80 is being configured.

For policies that are already applied we can use the Inspect API, but that doesn’t help when first creating a policy.

Composing policies is also challenging because of shadowing and ordering (see #2417). Shadowing happens when different policies have the same selector. This exists because we don’t currently have a way to merge policies.

Introducing targetRef

One of the primary goals of Kuma is ease of use. It became obvious that the policy matching API, whilst simple, wasn’t as powerful as we wanted it to be, so after some discussion we decided to rewrite it. The rewrite went through many discussions and design proposals. GEP-713 from the Kubernetes Gateway API inspired the final design of the new policies, which we detail below.

The central part of policy matching in Kuma 2.0 is what we call a targetRef. A targetRef is a logical group of dataplane proxies running in the mesh.

There are multiple “kinds” of targetRef:

Mesh: all the dataplanes of the mesh

    targetRef:
      kind: Mesh

MeshSubset: a subset of dataplane proxies across all services that have a set of tags

    targetRef:
      kind: MeshSubset
      tags:
        k8s.kuma.io/namespace: ns-1

MeshService: any dataplane proxy which belongs to a service (like all dataplanes with this kuma.io/service tag)

    targetRef:
      kind: MeshService
      name: backend_ns1_svc_80

MeshServiceSubset: like MeshService with extra tags to select a subset of all dataplanes. For example, you could pick only dataplanes with the tag version: v2.

    targetRef:
      kind: MeshServiceSubset
      name: backend_ns1_svc_80
      tags:
        version: v2
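To make the four kinds concrete, here is a minimal Python sketch of how a targetRef could be checked against a data plane proxy. It is an illustration only, not Kuma's implementation: the `matches` function is hypothetical, and it assumes each proxy is described by a single flat dictionary of tags.

```python
def matches(target_ref: dict, proxy_tags: dict) -> bool:
    """Return True if a proxy with `proxy_tags` is selected by `target_ref`.

    Hypothetical helper: assumes one flat tag dictionary per proxy.
    """
    kind = target_ref["kind"]
    wanted_tags = target_ref.get("tags", {})
    has_tags = all(proxy_tags.get(k) == v for k, v in wanted_tags.items())
    same_service = proxy_tags.get("kuma.io/service") == target_ref.get("name")

    if kind == "Mesh":
        return True                       # every proxy of the mesh
    if kind == "MeshSubset":
        return has_tags                   # any service, matching tags
    if kind == "MeshService":
        return same_service               # one service, any tags
    if kind == "MeshServiceSubset":
        return same_service and has_tags  # one service, matching tags
    raise ValueError(f"unknown targetRef kind: {kind}")


# Example proxy, reusing the tag values from the snippets above.
proxy = {
    "kuma.io/service": "backend_ns1_svc_80",
    "k8s.kuma.io/namespace": "ns-1",
    "version": "v1",
}
```

With this proxy, the Mesh, MeshSubset, and MeshService examples above all select it, while the MeshServiceSubset example does not because the proxy carries version: v1.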

A targetRef can be used in three places:

- Top level: the subset of proxies affected by this policy

- From: the subset of the incoming traffic to apply the configuration to

- To: the subset of the outgoing traffic to apply the configuration to

All policies share a common architecture and, depending on the policy type, have a from section, a to section, or both.

The architecture of a policy looks like:

    apiVersion: kuma.io/v1alpha1
    kind: MeshTimeout
    metadata:
      name: my-timeout
      namespace: kuma-system # Policies are now namespaced.
      labels:
        kuma.io/mesh: default # optional mesh
    spec:
      targetRef: # (1) top level targetRef, defines which dataplanes are getting their configuration modified by this policy
        kind: MeshSubset
        tags:
          with-timeout: v1
      to: # a list of configuration to apply to a subset of the outgoing traffic
        - targetRef: # (2)
            kind: MeshService
            name: outgoingServiceA
          default: # actual configuration
            http:
              requestTimeout: 5s
        - targetRef: # (3)
            kind: MeshService
            name: outgoingServiceB
          default:
            http:
              requestTimeout: 2s
      from: # a list of configuration to apply to a subset of the incoming traffic
        - targetRef: # (4)
            kind: Mesh
          default: # actual configuration
            http:
              requestTimeout: 1s

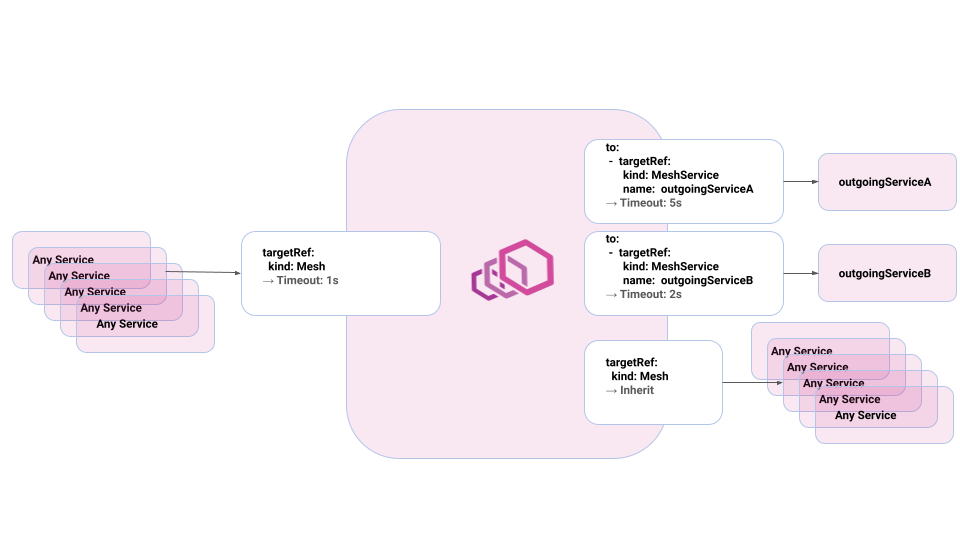

With this top-level targetRef (1) the policy only affects data plane proxies with the tag with-timeout=v1.

When applied, it will set different timeouts for incoming and outgoing traffic:

- Requests for

outgoingServiceA(2) have a 5 second timeout. - Requests for

outgoingServiceB(3) have a 2 second timeout. - All other outgoing requests will inherit the default timeout.

- On the receiving side (4), we’ll have a timeout of 1 second regardless of the source service.

The following schema summarizes this:

As you can see with this new policy it is easy to understand:

- Which data plane proxies are getting configured thanks to the top level targetRef

- What traffic for these proxies is affected thanks to from and to targetRef

- What actual configuration to apply thanks to the default inside the to or the from
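The reading of the MeshTimeout example can be replayed with a small Python sketch. This is an illustration under assumptions rather than Kuma's code: `POLICY_SPEC` is the spec from the example rewritten as a dictionary, `request_timeout` is a hypothetical helper, and only the Mesh and MeshService kinds are handled inside to and from.

```python
# The spec of the MeshTimeout example, rewritten as a Python dictionary.
POLICY_SPEC = {
    "targetRef": {"kind": "MeshSubset", "tags": {"with-timeout": "v1"}},
    "to": [
        {"targetRef": {"kind": "MeshService", "name": "outgoingServiceA"},
         "default": {"http": {"requestTimeout": "5s"}}},
        {"targetRef": {"kind": "MeshService", "name": "outgoingServiceB"},
         "default": {"http": {"requestTimeout": "2s"}}},
    ],
    "from": [
        {"targetRef": {"kind": "Mesh"},
         "default": {"http": {"requestTimeout": "1s"}}},
    ],
}


def request_timeout(spec, direction, peer_service):
    """Return the request timeout set by `spec` for one direction of traffic.

    `direction` is "to" (outgoing) or "from" (incoming); `peer_service` is the
    service on the other side. Returns None when the policy sets nothing, in
    which case the default timeout applies.
    """
    for item in spec.get(direction, []):
        ref = item["targetRef"]
        is_mesh = ref["kind"] == "Mesh"
        is_service = ref["kind"] == "MeshService" and ref.get("name") == peer_service
        if is_mesh or is_service:
            return item["default"]["http"]["requestTimeout"]
    return None
```

For instance, `request_timeout(POLICY_SPEC, "to", "outgoingServiceA")` returns "5s", an outgoing request to any service not listed returns None, and every incoming request gets "1s".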

Merging

Now that we’ve shown how a single policy works, we’ll describe what happens when many policies of the same type are at play.

This is where Kubernetes Gateway API proposal GEP-713 was heavily used as an inspiration. All targetRef kinds are ordered using the following rules:

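- By kind, from least to most specific: Mesh > MeshSubset > MeshService > MeshServiceSubset. More specific kinds come later in the order and take priority when configurations are merged.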

- At the same level we use lexicographic order on name
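As a rough illustration of these two rules, the ordering can be written as a sort key in Python. The policy names and the `sort_policies` helper below are hypothetical, and the sketch assumes the kind order reads from least specific (Mesh) to most specific (MeshServiceSubset), with ties broken by ascending policy name.

```python
# Position of each top-level targetRef kind in the order described above.
KIND_RANK = {"Mesh": 0, "MeshSubset": 1, "MeshService": 2, "MeshServiceSubset": 3}


def sort_policies(policies):
    """Sort policies by top-level targetRef kind, then by policy name."""
    return sorted(
        policies,
        key=lambda p: (KIND_RANK[p["spec"]["targetRef"]["kind"]], p["name"]),
    )


# Hypothetical policies, reduced to a name and a top-level targetRef.
policies = [
    {"name": "b-service", "spec": {"targetRef": {"kind": "MeshService", "name": "backend"}}},
    {"name": "z-mesh-wide", "spec": {"targetRef": {"kind": "Mesh"}}},
    {"name": "a-service", "spec": {"targetRef": {"kind": "MeshService", "name": "backend"}}},
    {"name": "subset", "spec": {"targetRef": {"kind": "MeshSubset", "tags": {"with-timeout": "v1"}}}},
]

ordered_names = [p["name"] for p in sort_policies(policies)]
```

Here `ordered_names` is `["z-mesh-wide", "subset", "a-service", "b-service"]`: the kind decides first, and the name only separates the two MeshService policies.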

So with many policies when building the data plane proxy configuration we:

- List all policies of a type

- Prune policies with a top level targetRef that doesn’t match the data plane proxy

- Order policies according to the total order defined above

- Concatenate all arrays inside from and to

- Merge configuration

For example, with these two policies:

    spec:
      targetRef:
        kind: Mesh
      from:
        - targetRef:
            kind: MeshService
            name: incomingServiceB
          default:
            http:
              requestTimeout: 5s
        - targetRef:
            kind: MeshService
            name: incomingServiceC
          default:
            http:
              requestTimeout: 10s
              idleTimeout: 5s
    ---
    spec:
      targetRef:
        kind: MeshSubset
        tags:
          with-timeout: v1
      from:
        - targetRef:
            kind: MeshService
            name: incomingServiceA
          default:
            http:
              requestTimeout: 3s
        - targetRef:
            kind: MeshService
            name: incomingServiceC
          default:
            http:
              requestTimeout: 2s

The resulting configuration would be equivalent to:

    spec:
      from:
        - targetRef:
            kind: MeshService
            name: incomingServiceB
          default:
            http:
              requestTimeout: 5s
        - targetRef:
            kind: MeshService
            name: incomingServiceA
          default:
            http:
              requestTimeout: 3s
        - targetRef:
            kind: MeshService
            name: incomingServiceC
          default:
            http:
              requestTimeout: 2s
              idleTimeout: 5s

MeshSubset has higher priority than Mesh, so the two configurations for incomingServiceC are merged:

- The request timeout is 2 seconds instead of 10

- The 5 second idleTimeout is inherited from the Mesh-level policy
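The concatenate and merge steps can be reproduced on this example with a short Python sketch. It is a simplification under assumptions, not Kuma's implementation: `deep_merge` and `merge_from` are hypothetical helpers, the specs are expected to be already sorted from lowest to highest priority, and from entries are grouped by kind and name only.

```python
import copy


def deep_merge(base, override):
    """Recursively merge two dicts; values from `override` win on conflict."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def merge_from(ordered_specs):
    """Concatenate the `from` lists in order, then merge per targetRef."""
    concatenated = [item for spec in ordered_specs for item in spec.get("from", [])]
    result = {}
    for item in concatenated:
        ref = item["targetRef"]
        key = (ref["kind"], ref.get("name"))
        result[key] = deep_merge(result.get(key, {}), item["default"])
    return result


# The two policies of the example, lowest priority (Mesh) first.
mesh_wide = {"targetRef": {"kind": "Mesh"}, "from": [
    {"targetRef": {"kind": "MeshService", "name": "incomingServiceB"},
     "default": {"http": {"requestTimeout": "5s"}}},
    {"targetRef": {"kind": "MeshService", "name": "incomingServiceC"},
     "default": {"http": {"requestTimeout": "10s", "idleTimeout": "5s"}}},
]}
subset = {"targetRef": {"kind": "MeshSubset", "tags": {"with-timeout": "v1"}}, "from": [
    {"targetRef": {"kind": "MeshService", "name": "incomingServiceA"},
     "default": {"http": {"requestTimeout": "3s"}}},
    {"targetRef": {"kind": "MeshService", "name": "incomingServiceC"},
     "default": {"http": {"requestTimeout": "2s"}}},
]}

merged = merge_from([mesh_wide, subset])
```

The entry for incomingServiceC ends up with requestTimeout 2s and idleTimeout 5s, while incomingServiceA and incomingServiceB keep the single value each of them was given.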

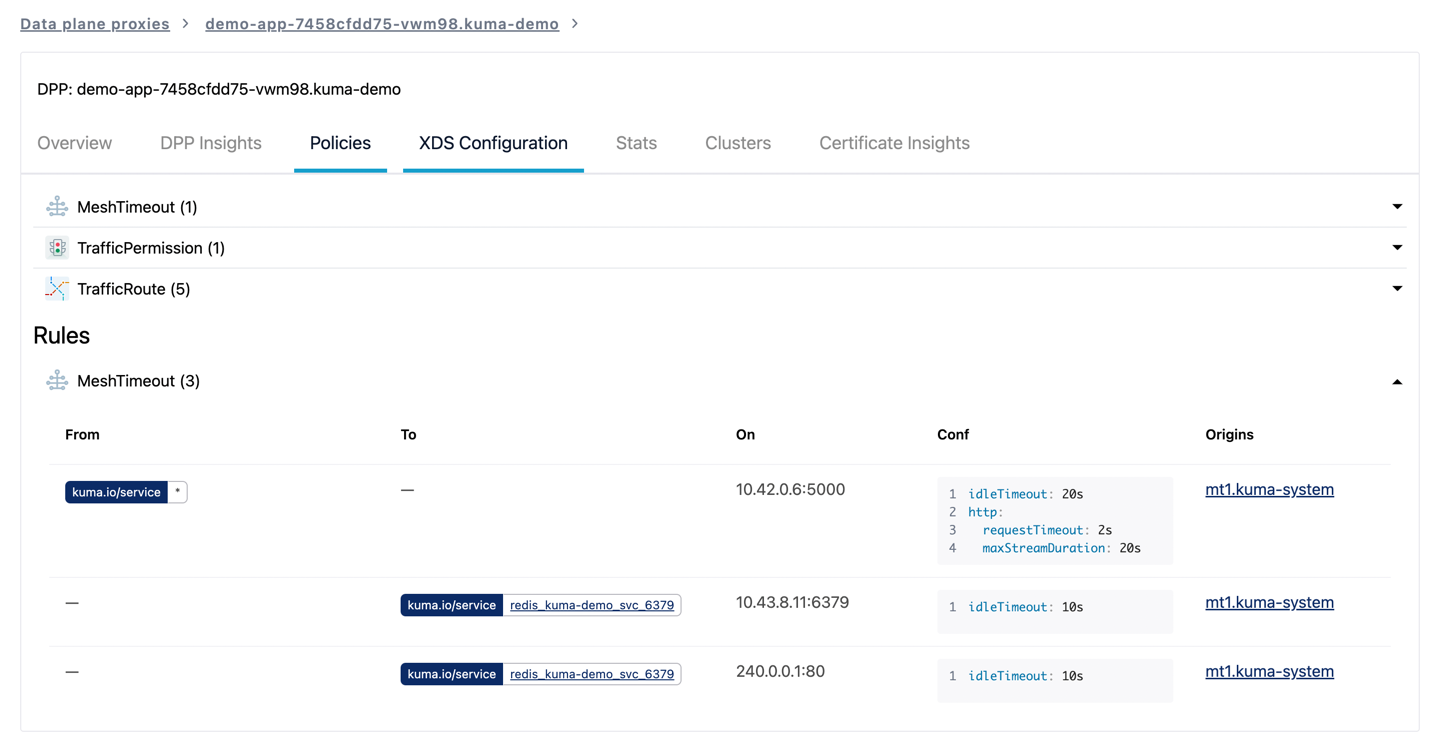

These new policies come with a new rules API which is getting integrated in the GUI in 2.1:

What’s next?

The first policies using this API, released in Kuma 2.0, are MeshTrafficPermission, MeshAccessLog, and MeshTrace. Other policies will roll out in Kuma 2.1, and we maintain an equivalence table in the docs. You can check the progress in the umbrella GitHub issue #5194. All designs are MADRs and your opinion and contributions are very welcome.

We are using this to help the Kubernetes GAMMA initiative by sharing our experience.

These new policies are Beta and you should try them out. However, mixing new and old policies of the same type currently results in undefined behavior. Migration strategies and tooling will come in future releases of Kuma.

This post described what policies are, the shortcomings of the existing API, and introduced its successor. We hope you’ll enjoy this improvement. If you have any questions feel free to ask the Kuma Community on Slack or join the monthly community call.
